Backend Overview

The Adapt Authoring Tool is an online environment for creating responsive e-learning content. At heart, the tool is a multi-tenanted, multi-user CMS and content creation tool based on the Express web framework for Node.js. This document covers only the server-side aspect of the tool; the client-side app, which is a Backbone.js Single Page App (SPA), is currently in the very early stages of development. Pages for the client-side app will be added as development progresses.

The core of the Authoring Tool is a node application that wraps an express server and exposes a pluggable interface for various classes of functionality. It exists in lib/application.js, though it might be more appropriately named origin.js. The main constructor is Origin and it inherits from EventEmitter. The object itself does not contain much functionality, but gathers together the subsystems, loads them and then runs the express server.

Before running the app, you need to add a config.json file and have a MongoDB service running.

The application is run with the command node server.js. This file simply "requires" the lib/application module, instantiates the tool and calls the start method. The start method loads the subsystems, listens for a modulesReady event, and then configures and starts the express server. Finally, a serverStarted event is fired.

Most of the modules are loaded via the authoring tool's preloadModule method. If the module exposes a preload method, it is called and passed the authoring tool instance as an argument. Each module is responsible for its own loading behaviour and may attach itself to the authoring tool instance as an attribute. If the module does something asynchronously at load time, it can tell the authoring tool to wait for a moduleReady event. The authoring tool tracks the number of modules that are still loading, and once it has received a moduleReady event from all of those modules, it fires the modulesReady event.
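The ready-tracking described above can be sketched with nothing but Node's EventEmitter. This is a minimal illustration, not the code in lib/application.js: the waitForModule helper, the _modulesLoading counter, the preloadModule signature, and the sample database module are all assumptions made for the example.

```javascript
const { EventEmitter } = require('events');

// Hypothetical stand-in for the Origin object; only ready-tracking is shown.
class Origin extends EventEmitter {
  constructor() {
    super();
    this._modulesLoading = 0; // assumed name: modules we are still waiting on
    this.on('moduleReady', () => {
      this._modulesLoading -= 1;
      if (this._modulesLoading === 0) {
        this.emit('modulesReady');
      }
    });
  }

  // Assumed helper: a module calls this to ask the app to wait for it.
  waitForModule() {
    this._modulesLoading += 1;
  }

  // Calls the module's preload hook, if it has one, passing this instance.
  preloadModule(name, mod) {
    if (mod && typeof mod.preload === 'function') {
      mod.preload(this);
    }
  }
}

// A sample module that loads asynchronously (for example, a database connection).
const database = {
  preload(app) {
    app.db = this;       // attach to the app instance as an attribute
    app.waitForModule(); // ask the app to wait for our moduleReady event
    this.app = app;
  },
  connected() {
    this.app.emit('moduleReady', 'database');
  },
};

const app = new Origin();
const log = [];
app.once('modulesReady', () => log.push('modulesReady'));
app.preloadModule('database', database);
app.preloadModule('logger', {}); // no preload hook, so nothing to wait for
database.connected();            // the last pending module reports in
```

Counting pending modules rather than listing them keeps the app unaware of which modules exist; each module only has to announce that it is waiting and, later, that it is ready.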

For convenience, the Origin object exposes the Express server's head, get, post, put, and delete methods as methods of itself. In most cases, however, routes should be added using the lib/rest module.

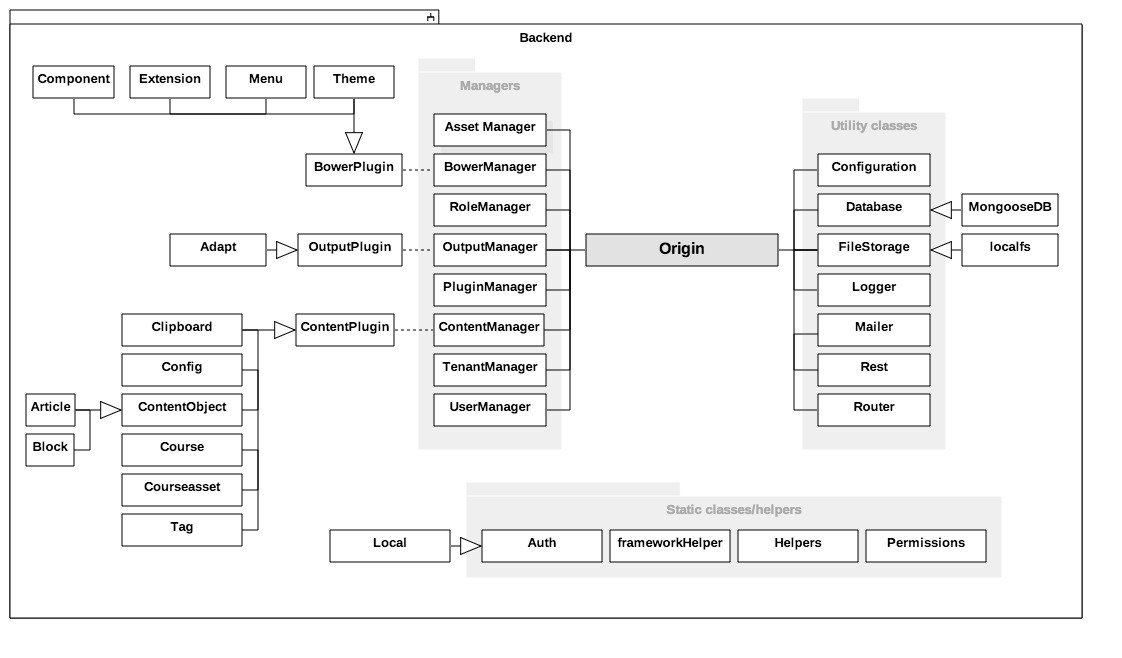

The diagram above illustrates the main units in the backend, as well as their relationship with the central Origin object. Straight lines denote stored references (i.e. variables), dotted lines represent definitions (i.e. objects defined within the 'parent'), and arrowed lines show inheritance.

The structure of the project looks like this:

- conf/ # configuration files here
- lib/ # main subsystems here
  - contentSchema/ # schemas for content types here (course.schema, article.schema, component.schema etc)
  - dml/ # database plugins here
    - schema/ # core database schema here
- plugins/ # all plugins here, categorized by type
  - auth/ # authentication plugins
    - local/ # passport-local implementation
  - filestorage/ # filestorage plugins
    - localfs/ # local filesystem storage implementation
  - test/ # sets up plugin unit tests, all plugins should have their own test/ directory
- test/ # core unit tests

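The important pluggable types are database, authentication, file storage, content, and output plugins.

The primary subsystems are user and tenant management, content management, permissions, the REST module, and tagging. Each is described below.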

The database module is defined in lib/database.js and differs from the other pluggable types in that it must be configured in the config.json file prior to running the server. For this reason, database plugins don't live in the plugins/ directory with all the other plugin types, but are kept in lib/dml/. Currently, only one database plugin exists - the mongoose.js plugin. Mongoose style schema documents are used to define new entity types - the database plugin will read the lib/dml/schema/ directory and load any schemas defined in there, but any plugin can also add a schema to the database by calling db.loadModel.

Presently, the database interface consists of only a few CRUD methods. This is expected to expand.

The authentication module defines what an authentication plugin looks like (the AuthPlugin prototype) and sets up the routes /api/login, /api/register, and /api/authcheck. All of these routes delegate their implementation to the currently active auth plugin. Auth plugins are found under plugins/auth/ and should override the methods init, authenticate, and registerUser. The local auth plugin is a straightforward example.
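As a rough illustration of the override pattern, the sketch below defines a stand-in base type and an in-memory plugin. The method signatures (an app argument for init, callback-style authenticate and registerUser) are assumptions, as is the MemoryAuth name; the real AuthPlugin prototype may differ. Node's built-in crypto.scryptSync stands in for the project's own password hashing so the example has no dependencies.

```javascript
const crypto = require('crypto');

// Hypothetical base type: a concrete plugin must override every method.
class AuthPlugin {
  init(app) { throw new Error('init must be overridden'); }
  authenticate(credentials, callback) { throw new Error('authenticate must be overridden'); }
  registerUser(user, callback) { throw new Error('registerUser must be overridden'); }
}

// Illustrative plugin that keeps users in memory, keyed by email.
class MemoryAuth extends AuthPlugin {
  init(app) {
    this.app = app;
    this.users = new Map(); // email -> { salt, hash }
  }

  registerUser(user, callback) {
    if (this.users.has(user.email)) {
      return callback(new Error('email already registered'));
    }
    const salt = crypto.randomBytes(16);
    const hash = crypto.scryptSync(user.password, salt, 32);
    this.users.set(user.email, { salt, hash });
    callback(null, { email: user.email });
  }

  authenticate(credentials, callback) {
    const record = this.users.get(credentials.email);
    if (!record) {
      return callback(null, false);
    }
    const hash = crypto.scryptSync(credentials.password, record.salt, 32);
    // Constant-time comparison of the two 32-byte hashes.
    callback(null, crypto.timingSafeEqual(hash, record.hash));
  }
}

const auth = new MemoryAuth();
auth.init({});
const results = [];
auth.registerUser({ email: 'a@example.com', password: 'secret' }, () => {
  auth.authenticate({ email: 'a@example.com', password: 'secret' }, (err, ok) => results.push(ok));
  auth.authenticate({ email: 'a@example.com', password: 'wrong' }, (err, ok) => results.push(ok));
});
```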

The auth module also exposes the hashPassword and validatePassword methods; the bcrypt-nodejs module is used for hashing. This module is a JavaScript implementation, chosen because the standard bcrypt module can be difficult to use on Microsoft Windows. Alternatively, we could revert to using the standard bcrypt module and fall back to another crypto method if the dependencies are not available.

The FileStorage module exposes methods for writing, moving, and deleting files and directories. Most of the methods are fairly low level. The data passed in and out of filestorage methods is typically represented by Node.js Buffer objects. There is presently one FileStorage plugin, in plugins/filestorage/localfs/, which allows writing to the local filesystem. Any client can get an instance of a filestorage plugin as follows:

    var filestorage = app.filestorage;

    filestorage.getStorage('localfs', function (error, localfs) {
      if (!error) {
        // do something with localfs
      }
    });

A filestorage plugin should return valid stream objects from the createWriteStream and createReadStream methods, or at least objects that conform to the Node.js stream interface.
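To make the stream requirement concrete, here is a minimal sketch of a storage backend that keeps files in memory and returns real Node.js stream objects. The MemoryStorage class and its files map are hypothetical and exist only for this example; only the createWriteStream and createReadStream method names come from the text above.

```javascript
const { Readable, Writable } = require('stream');

// Hypothetical in-memory backend; not one of the project's plugins.
class MemoryStorage {
  constructor() {
    this.files = new Map(); // path -> Buffer
  }

  createWriteStream(path) {
    const files = this.files;
    files.set(path, Buffer.alloc(0)); // start with an empty file
    return new Writable({
      // Append each incoming chunk (a Buffer by default) to the stored file.
      write(chunk, encoding, callback) {
        files.set(path, Buffer.concat([files.get(path), chunk]));
        callback();
      },
    });
  }

  createReadStream(path) {
    const data = this.files.get(path);
    if (data === undefined) {
      // Report a missing file as a stream error, as fs.createReadStream does.
      const missing = new Readable({ read() {} });
      missing.destroy(new Error(`no such file: ${path}`));
      return missing;
    }
    return Readable.from([data]);
  }
}

const storage = new MemoryStorage();
storage.createWriteStream('notes.txt').end('hello world');

storage
  .createReadStream('notes.txt')
  .on('data', (chunk) => console.log(chunk.toString()));
```

Because both methods return genuine stream objects, callers can pipe an upload straight into createWriteStream or pipe createReadStream into an HTTP response without knowing where the bytes are stored.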

Content plugins represent not only the content object types found in the framework (Content Object, Article, Block, Config etc.), but also some data-types that are used internally to the system such as tags and clipboard data.

Output plugins allow content to be exported or imported in popular formats. The most common output, and the first plugin, will of course be the Adapt Output Framework, but we foresee the possibility of other output plugins, such as export to Word document, PDF, or plain text.

The interface so far consists of two important methods, "Publish" and "Export", that should be implemented in all output plugins. In the case of the Adapt Output plugin, "Publish" allows users to download a zipped package compatible with most major LMSes or to preview their work live in the browser, while "Export" allows users to download a zip containing all source code for a course, thus allowing standalone development using Adapt's command-line tools.

Users, tenants and permissions are closely related, in that each user (with the exception of the super admin) belongs exclusively to a tenant. Users are uniquely identified by email. In future, we may allow users to jump between tenants in an MNet-like manner. The usermanager is mainly responsible for user CRUD, and also exposes serialize/deserialize methods to be used by Passport.js for managing authenticated user sessions.

New users should not generally be created by using the methods of this module directly, but should be created using an authentication plugin instead. These plugins should take care of mapping a user to a tenant and may also perform other necessary tasks.

Tenants are groupings of entities and are separated from each other by the use of an individual database per tenant. When a user logs in to the system, their email is checked against the global user collection, their tenant is determined, and their tenant information is saved in the session, which the database module uses to determine the context in which queries are made. Further information on the multi-tenancy approach can be found here. Presently this subsystem is really only used for CRUD. REST API routes may be added here in future.

The ContentManager module should be used for CRUD of courses and course content. Presently, it scans for content schemas (plugins/content) during startup and adds the schemas it finds there to the database. The module adds routes to the REST API for CRUD of these schemas.

Adding a new content type is as simple as adding a new schema to the above directory and restarting the app. For example, I might add a schema for managing foo objects:

    # foo schema
    {
      "name": {
        "type": "String",
        "required": "true",
        "default": "Untitled"
      },
      "description": {
        "type": "String"
      },
      "dateCreated": "Date",
      "dateModified": "Date"
    }

A client can then create a new foo with a POST request to http://hostname/api/content/foo with an appropriate JSON payload. You can retrieve a single foo with a GET request to http://hostname/api/content/foo/id or retrieve all foos with a GET request to http://hostname/api/content/foos. Updates and deletes are achieved with requests to http://hostname/api/content/foo/id using PUT and DELETE respectively.

Permissions are used to allow or deny a user certain actions in relation to a resource or a tenant. Permissions are described in policy documents that look much like Amazon's Access Policy Language. A broad-phase permission assessment is made when a request is made to a particular URL, but router functions may also use the permissions module to do more fine-grained checking.

The permissions module contains a middleware function to determine if the currently authenticated user has access to a particular resource. The resource is inferred from the URL and the action is inferred from the HTTP method. If the user does not have the required access, a 403 Forbidden response results.
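The broad-phase check can be pictured as an Express-style middleware function. The sketch below is an assumption-laden illustration, not the module's code: the method-to-action mapping, the shape of the grants object, and the simple prefix match on the URL path are all invented for the example. It relies only on req.method, req.path, req.user, res.statusCode, and res.end.

```javascript
// Assumed mapping from HTTP method to an action name.
const ACTIONS = { GET: 'read', POST: 'create', PUT: 'update', DELETE: 'delete' };

// grants: hypothetical policy store, userId -> [{ action, resource }].
function createPermissionCheck(grants) {
  return function checkPermission(req, res, next) {
    const action = ACTIONS[req.method];  // action inferred from the HTTP method
    const resource = req.path;           // resource inferred from the URL
    const userGrants = (req.user && grants[req.user.id]) || [];
    const allowed = userGrants.some(
      // Simplification: a grant covers every path that starts with its resource.
      (grant) => grant.action === action && resource.startsWith(grant.resource)
    );
    if (!allowed) {
      res.statusCode = 403;
      return res.end('Forbidden');
    }
    next();
  };
}

// Exercise the middleware with minimal stand-ins for req and res.
const check = createPermissionCheck({
  u1: [{ action: 'read', resource: '/api/content' }],
});

function run(method, path) {
  const res = { statusCode: 200, end() {} };
  let passed = false;
  check({ method, path, user: { id: 'u1' } }, res, () => { passed = true; });
  return passed ? 'next' : res.statusCode;
}

const readResult = run('GET', '/api/content/foo');
const deleteResult = run('DELETE', '/api/content/foo');
```

Here readResult is 'next' because the user holds a read grant on /api/content, while deleteResult is 403 because no delete grant exists.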

Finer-grained checking can be performed by requiring the permissions module and using the hasPermission method:

    permissions.hasPermission(userid, 'actionType', permissions.buildResourceString(tenantid, '/api/projects'), function (error, allowed) {
      // do something if allowed is true
    });

The rest module is used to attach routes to the REST API. This is how other components should typically add a service for the Backbone app. When adding a route, '/project/users' for example, the rest module will prepend the current API URL to the route, so the actual endpoint will be 'http://hostname/api/project/users'.
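The prefixing behaviour can be sketched in a few lines. The createRest function, its apiRoot parameter, and the recording fakeServer are hypothetical names used only for this example; the real module's interface may differ.

```javascript
const METHODS = ['get', 'post', 'put', 'delete'];

// Hypothetical helper: wraps a server so every route is registered under apiRoot.
function createRest(server, apiRoot = '/api') {
  const rest = {};
  for (const method of METHODS) {
    rest[method] = (route, handler) => server[method](apiRoot + route, handler);
  }
  return rest;
}

// Stand-in for the Express server that just records what gets registered.
const registered = [];
const fakeServer = {};
for (const method of METHODS) {
  fakeServer[method] = (path, handler) => registered.push({ method, path });
}

const rest = createRest(fakeServer);
rest.get('/project/users', (req, res) => res.end('[]'));
// registered now holds { method: 'get', path: '/api/project/users' }
```

Keeping the prefix in one place means a component never hard-codes '/api', so the API root can change without touching the components that register routes.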

Tagging gives us a way of grouping various resources together. This can be used to facilitate searching, and we envision that it will also be possible to group users together and set permissions for those groupings. Entities would be linked to tags with a resource string identical to those used in the policy statements of the permissions module.