Collect links to profiles by username through 10+ search engines (see the full list below).

Idea by Cyber Detective

Features:

- multiple engines

- proxy support

- CSV file export

- plugins

- pdf metadata extraction

- social media info extraction

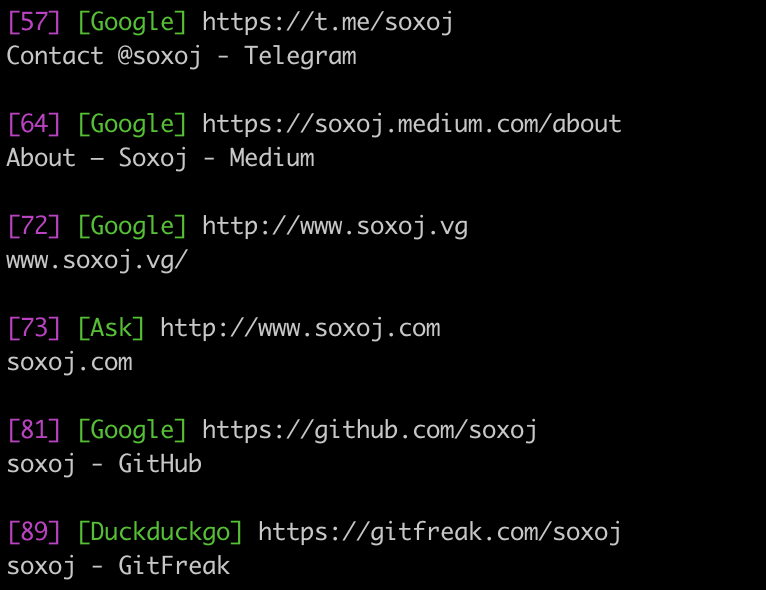

./marple.py soxoj

https://t.me/soxoj

Contact @soxoj - Telegram

https://github.com/soxoj

soxoj - GitHub

https://coder.social/soxoj

soxoj - Coder Social

https://gitmemory.com/soxoj

soxoj

...

PDF files

https://codeby.net/attachments/v-0-0-1-social-osint-fundamentals-pdf.45770

Social OSINT fundamentals - Codeby.net

/Creator: Google

...

Links: total collected 111 / unique with username in URL 97 / reliable 38 / documents 3

Advanced usage:

./marple.py soxoj --plugins metadata

./marple.py smirnov --engines google baidu -v

All you need is Python3. And pip. And requirements, of course.

pip3 install -r requirements.txt

You need API keys for some search engines (see requirements in Supported sources). Keys should be exported to env in this way:

export YANDEX_KEY=key

You can specify 'junk threshold' with option -t or --threshold (default 300) to get more or less reliable results.

Junk score is summing up from length of link URL and symbols next to username as a part of URL.

Also you can increase count of results from search engines with option --results-count (default 1000). Currently limit is only applicable for Google.

Other options:

-h, --help show this help message and exit

-t THRESHOLD, --threshold THRESHOLD

Threshold to discard junk search results

--results-count RESULTS_COUNT

Count of results parsed from each search engine

--no-url-filter Disable filtering results by usernames in URLs

--engines {baidu,dogpile,google,bing,ask,aol,torch,yandex,naver,paginated,yahoo,startpage,duckduckgo,qwant}

Engines to run (you can choose more than one)

--plugins {socid_extractor,metadata,maigret} [{socid_extractor,metadata,maigret} ...]

Additional plugins to analyze links

-v, --verbose Display junk score for each result

-d, --debug Display all the results from sources and debug messages

-l, --list Display only list of all the URLs

--proxy PROXY Proxy string (e.g. https://user:[email protected]:8080)

--csv CSV Save results to the CSV file

| Name | Method | Requirements |

|---|---|---|

| scraping | None, works out of the box; frequent captcha | |

| DuckDuckGo | scraping | None, works out of the box |

| Yandex | XML API | Register and get YANDEX_USER/YANDEX_KEY tokens |

| Naver | SerpApi | Register and get SERPAPI_KEY token |

| Baidu | SerpApi | Register and get SERPAPI_KEY token |

| Aol | scraping | None, scrapes with pagination |

| Ask | scraping | None, scrapes with pagination |

| Bing | scraping | None, scrapes with pagination |

| Startpage | scraping | None, scrapes with pagination |

| Yahoo | scraping | None, scrapes with pagination |

| Mojeek | scraping | None, scrapes with pagination |

| Dogpile | scraping | None, scrapes with pagination |

| Torch | scraping | Tor proxies (socks5://localhost:9050 by default), scrapes with pagination |

| Qwant | Qwant API | Check if search available in your exit IP country, scrapes with pagination |

$ python3 -m pytest tests- Proxy support

- Engines choose through arguments

- Exact search filter

- Engine-specific filters

- 'Username in title' check

Sector035 - Week in OSINT #2021-50

OS2INT - MARPLE: IDENTIFYING AND EXTRACTING SOCIAL MEDIA USER LINKS