Watson-Alex, your audio assistant

Use your microphone to check the weather or hold a conversation with an assistant bot, powered by Watson cognitive technologies.

IoT Audio Cognitive

The idea behind this project is to provide a fun, hands-on workshop that uses Node-RED and Watson's cognitive services for audio conversations. Audio comes with its own challenges, and this step-by-step tutorial aims to help you enjoy the coding while reaching your goals.

This pattern uses the Car Dashboard Conversation workspace that comes by default when you create the Assistant (formerly Conversation) service. This keeps the setup simple, but you can always create or import your own conversation workspace. That could be covered in a separate tutorial once developers are comfortable with this pattern.

After you complete this tutorial, the application will record your speech and send it to Watson's services to retrieve the weather data for the city you asked about. It can also send commands and receive responses through a conversation, for example to turn on the lights or play music.

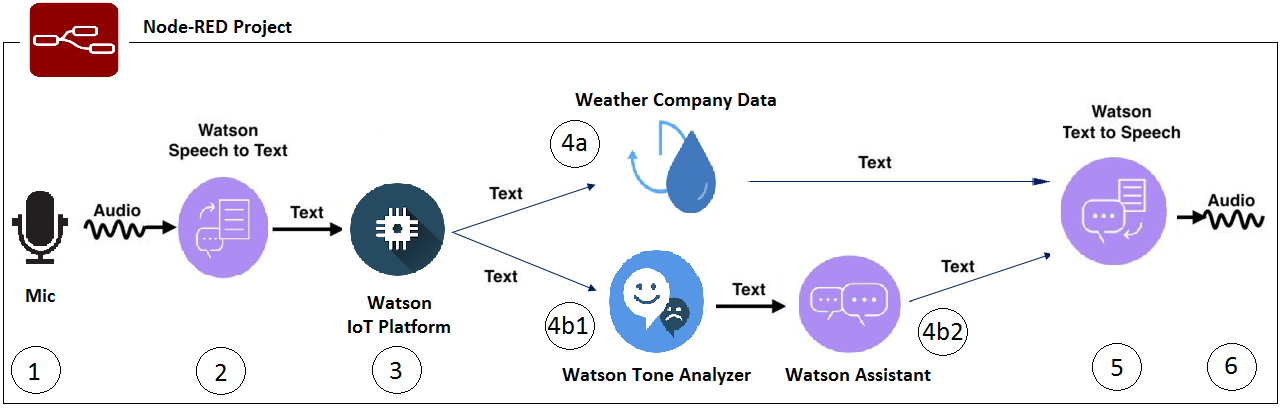

This tutorial helps you create an application that records your speech and sends the audio to be converted into text. The Internet of Things Platform receives this text and assigns it to an existing device, which in turn sends it back so it can be checked: is it a weather request or a command?

If it is a weather request, the text is sent to the Weather Company Data service to fetch the answer. The answer is then converted to speech and passed to the audio player, which reads it aloud through the laptop's speakers.

If it is a command, the Tone Analyzer first analyzes the text and then passes it to the Assistant node, which handles the request by going through the conversation workspace and responds accordingly. If the request is something the workspace was not designed for, the conversation dialog reads out an error saying that it cannot handle the request. Otherwise, it performs the action and confirms through the audio player that the request was carried out.
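The weather-or-command decision described above could be sketched as the body of a Node-RED function node with two outputs. This is only an illustration: the keyword list and the name `routeTranscript` are assumptions, not taken from the actual flow, which may detect weather requests differently.

```javascript
// Hypothetical keyword list; the real flow may use a different check.
const WEATHER_KEYWORDS = ["weather", "forecast", "temperature"];

// Mirrors a Node-RED function node with two outputs:
// output 1 -> weather branch, output 2 -> command branch.
function routeTranscript(msg) {
  const text = String(msg.payload || "").toLowerCase();
  const isWeather = WEATHER_KEYWORDS.some((word) => text.includes(word));
  // Node-RED sends each array element to the matching output;
  // a null entry means nothing is sent on that output.
  return isWeather ? [msg, null] : [null, msg];
}
```

Inside an actual function node, the body of `routeTranscript` would be pasted directly, since Node-RED already provides `msg` and expects a `return` of this array shape.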

You can choose the voice, language, and gender used by this pattern's audio reader.

There is a lot more to it, and coding is always fun, especially with Node-RED. Seeing how many Watson services this project brings together, one can appreciate what Node-RED makes possible.

1. Audio is recorded and converted to text by Speech-to-Text.

2. The text is sent to the Internet of Things Platform and associated with a device.

3. The text is received back from the IoT Platform and checked: it is either a request for weather data (4a) or a command to send through the conversation (4b1 and 4b2).

4a. Weather Insights returns the weather data for the mentioned city, which is then sent as text to Text-to-Speech.

OR

4b1. A received command is sent to the Tone Analyzer.

4b2. From the Tone Analyzer, the text continues to the Assistant node for processing and is then sent to Text-to-Speech.

5. Text-to-Speech receives the payload and sends the resulting audio to the audio player.

6. The audio player reads the final text out loud.
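To make the order of the stages concrete, here is a minimal sketch of the whole chain as one function. Every entry in `services` is a hypothetical injected stub standing in for a Node-RED node. None of these names are Watson SDK calls, and the real flow wires nodes together visually instead of calling functions.

```javascript
// Sketch only: each services.* function is a hypothetical stand-in
// for one node in the flow, supplied by the caller.
async function runPipeline(audio, services) {
  // Recorded audio is converted to text.
  const text = await services.speechToText(audio);
  // The text goes through the IoT Platform device and comes back.
  const deviceText = await services.publishToDevice(text);

  let reply;
  if (services.isWeatherRequest(deviceText)) {
    // 4a: weather branch
    reply = await services.weather(deviceText);
  } else {
    // 4b1: tone analysis, then 4b2: assistant
    const tone = await services.analyzeTone(deviceText);
    reply = await services.assistant(deviceText, tone);
  }

  // The reply text is converted to speech and played.
  const speech = await services.textToSpeech(reply);
  return services.play(speech);
}
```

Passing the stages in as functions keeps the sketch runnable without any credentials, and makes it easy to swap one stage (for example, a different weather source) without touching the rest.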

- IBM Cloud

- IBM Cloud Documentation

- IBM Cloud Developers Community

- IBM Watson Internet of Things

- IBM Watson IoT Platform

- IBM Watson IoT Platform Developers Community

- [Node-RED]

- [Speech-To-Text]

- [Text-To-Speech]

- [Weather Insights]

- [Tone Analyzer]

- [Conversation]

- [Internet of Things]

Related links: