Home

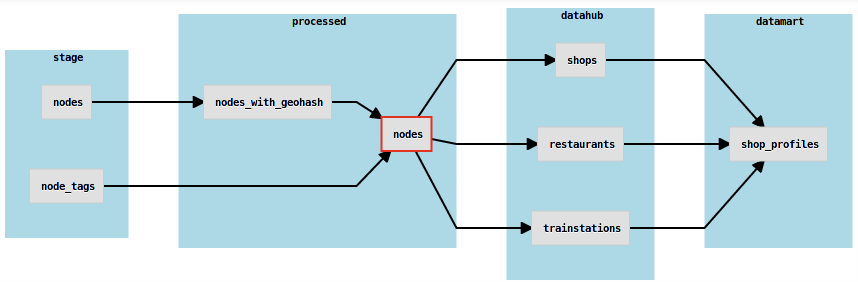

Schedoscope is a scheduling framework for agile development, testing, (re)loading, and monitoring of your Hadoop data warehouse.

Schedoscope makes the headache go away that you are certain to get when you frequently have to roll out and retroactively apply changes to computation logic and data structures in your data warehouse with traditional ETL job schedulers such as Oozie.

Scheduling with Schedoscope is based on three principles:

- Goal orientation: with Schedoscope, you specify the views you want, and the scheduler takes care that the corresponding data are loaded.

- Self-sufficiency: Schedoscope has all information about views available: structure, dependencies, and transformation logic. The scheduler thus can start out from an empty metastore and create all tables and partitions as data are loaded. Also, metadata management and lineage tracing are trivial, as data structures and dependencies are explicitly specified.

- Reloading is loading: Schedoscope implements measures to automatically detect changes to view structure and computation logic; as it is self-sufficient, it can then automatically recompute potentially outdated views.
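To make goal orientation and "reloading is loading" more concrete, here is a minimal Python sketch of the idea. It is not Schedoscope's actual Scala/Akka implementation. The view model, the view names (raw.nodes, proc.nodes, and so on), and the plan and checksum helpers are hypothetical and exist only for illustration. Asking for a goal view pulls in all of its transitive dependencies in dependency order. A view counts as outdated if it has never been materialized, if the checksum of its transformation logic differs from the stored one, or if one of its dependencies is outdated.

```python
import copy
import hashlib
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical view model: each view lists its direct dependencies and the
# text of its transformation logic (e.g. a HiveQL query).
VIEWS = {
    "raw.nodes": {"deps": [], "logic": "LOAD nodes"},
    "raw.tags": {"deps": [], "logic": "LOAD tags"},
    "proc.nodes": {"deps": ["raw.nodes", "raw.tags"], "logic": "SELECT ... JOIN ..."},
    "mart.shops": {"deps": ["proc.nodes"], "logic": "SELECT ... WHERE shop"},
}


def checksum(logic: str) -> str:
    """Fingerprint of a view's transformation logic."""
    return hashlib.sha256(logic.encode("utf-8")).hexdigest()


def plan(goal, views, stored_checksums):
    """Return the views to (re)compute for goal, dependencies first."""
    # Goal orientation: collect the goal view and all views it transitively needs.
    needed, stack = set(), [goal]
    while stack:
        view = stack.pop()
        if view not in needed:
            needed.add(view)
            stack.extend(views[view]["deps"])
    # Order the needed views so that each one comes after its dependencies.
    graph = {v: views[v]["deps"] for v in needed}
    order = list(TopologicalSorter(graph).static_order())
    # Reloading is loading: a view is outdated if it was never materialized
    # (no stored checksum), its logic changed, or a dependency is outdated.
    outdated = set()
    for view in order:
        if stored_checksums.get(view) != checksum(views[view]["logic"]) or any(
            dep in outdated for dep in views[view]["deps"]
        ):
            outdated.add(view)
    return [v for v in order if v in outdated]


# Pretend every view has been materialized with its current logic.
stored = {v: checksum(spec["logic"]) for v, spec in VIEWS.items()}
print(plan("mart.shops", VIEWS, stored))    # []

# Changing the logic of proc.nodes outdates it and everything downstream.
changed = copy.deepcopy(VIEWS)
changed["proc.nodes"]["logic"] = "SELECT ... LEFT JOIN ..."
print(plan("mart.shops", changed, stored))  # ['proc.nodes', 'mart.shops']
```

An empty dictionary of stored checksums corresponds to starting from an empty metastore. In that case, plan returns all four views, each placed after its dependencies.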

Get a glimpse of what Schedoscope does for you:

Build it:

[~]$ git clone https://github.com/ottogroup/schedoscope.git

[~]$ cd schedoscope

[~/schedoscope]$ MAVEN_OPTS='-Xmx1G' mvn clean install

Follow the Open Street Map tutorial to install and run Schedoscope in a standard Hadoop distribution image:

Read the View DSL Primer for more information about the capabilities of the Schedoscope DSL:

Read more about how Schedoscope actually performs its scheduling work:

Check out Metascope! It's an add-on to Schedoscope for collaborative metadata management, data discovery and exploration, and data lineage tracing:

We have released Version 0.9.0 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This release upgrades Spark transformations from Spark version 1.6.0 to Spark version 2.2.0 based on Cloudera's CDH 5.12 Spark 2.2 beta parcel. As a consequence, Schedoscope has been lifted to Scala 2.11 and JDK8 as well.

This is an incompatible change that will likely require adapting the Spark jobs, dependencies, and build pipelines of existing Schedoscope projects; hence the increment of the minor release number.

We have released Version 0.8.9 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This release contains the following enhancements and changes:

- Cloudera client libraries updated to CDH-5.12.0;

- a DistCp transformation for view materialization by parallel, cross-cluster file copying;

- a new development mode setup that helps developers to easily copy data from a production environment to the direct dependencies of the view they are developing;

- shell transformations had to be moved back into schedoscope-core to facilitate development mode;

- a versioning issue with the Scala Maven compiler plugin with regard to Scala 2.10 was fixed so that Schedoscope finally compiles and runs under JDK8 as well.
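The difference between copying data only to the direct dependencies of a view and rebuilding its whole dependency tree can be illustrated with a small Python sketch. Everything in it is hypothetical: the dependency map, the /prod and /dev folder layout, and the view_folder and dev_copy_plan helpers are invented. The sketch only builds standard "hadoop distcp <src> <dst>" command lines without running them, and it does not reflect how Schedoscope's development mode or its DistCp transformation are actually implemented.

```python
# Hypothetical view dependencies: view -> views it reads from directly.
DEPS = {
    "mart.shops": ["proc.nodes", "proc.tags"],
    "proc.nodes": ["raw.nodes"],
    "proc.tags": ["raw.tags"],
    "raw.nodes": [],
    "raw.tags": [],
}


def view_folder(root: str, view: str) -> str:
    """Map a view name to a folder under root (hypothetical layout)."""
    return root + "/" + view.replace(".", "/")


def dev_copy_plan(view, deps, prod_root="/prod", dev_root="/dev"):
    """Build one 'hadoop distcp <src> <dst>' command per direct dependency.

    Only the direct inputs of the view under development are copied; their own
    upstream views are not needed to run the view's transformation in dev.
    """
    return [
        ["hadoop", "distcp", view_folder(prod_root, d), view_folder(dev_root, d)]
        for d in deps.get(view, [])
    ]


for cmd in dev_copy_plan("mart.shops", DEPS):
    print(" ".join(cmd))
# hadoop distcp /prod/proc/nodes /dev/proc/nodes
# hadoop distcp /prod/proc/tags /dev/proc/tags
```

The raw.* views are never touched in this sketch. The view under development reads only from proc.nodes and proc.tags, so copying those two is enough.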

We have released Version 0.8.7 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This version contains a fix for a critical Metascope bug, introduced with the previous version, that prevented startup. Also, Metascope field lineage is finally documented in the View DSL Primer and the Metascope Primer.

We have released Version 0.8.6 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This version adds support for field-level data lineage to Metascope (automatically inferred from Hive transformations, declaratively specifiable for other transformations). Also, Metascope lineage graph rendering has been reworked. Extensive documentation is to come.

Schedoscope now fails immediately if a driver specified in schedoscope.conf cannot be found on the classpath.

We have released Version 0.8.5 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This version adds support for float view fields to JDBC exports.

We have released Version 0.8.4 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This version removes a race condition in the file system driver initialization that seems to have been introduced with CDH-5.10. Also, we have changed how we delete and recreate output folders for Map/Reduce transformations to avoid Hive partitions pointing to temporarily non-existent folders.

We have released Version 0.8.3 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This version has been built against Cloudera's CDH 5.10.1 client libraries. The test framework no longer artificially sets the storage formats of views under test to text, making testing of Spark jobs writing Parquet files simpler. The robustness of the Schedoscope HTTP service has been improved in the face of invalid view parameters.

We have released Version 0.8.2 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This version provides significant performance improvements when initializing the scheduling state for a large number of views.

We have released Version 0.8.1 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This fixes a critical bug that could result in commands being applied to all views in a table and not just the ones addressed. Do not use Release 0.8.0.

We have released Version 0.8.0 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

Schedoscope 0.8.0 includes, among other things:

- a significant rework of Schedoscope's actor system that supports testing and uses significantly fewer actors, reducing the stress on poor Akka;

- support for a lot more Hive storage formats;

- definition of arbitrary Hive table properties / SerDes;

- stability, performance, and UI improvements to Metascope;

- the names of views being transformed appear as the job name in the Hadoop resource manager.

Please note that Metascope's database schema has changed with this release, so back up your database before deploying.

We have released Version 0.7.1 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

This release includes a fix removing bad default values for the driver setting location for some transformation types. Moreover, it now includes the config setting schedoscope.hadoop.viewDataHdfsRoot which allows one to set a root folder different from /hdp for view table data without having to register a new dbPathBuilder builder function for each view.

Spark transformations, finally! Build views based on Scala and Python Spark 1.6.0 jobs or run your Hive transformations on Spark. Test them using the Schedoscope test framework like any other transformation type. HiveContext is supported.

We have also upgraded Schedoscope's dependencies to CDH-5.8.3. There is a catch, though: we had to backport Schedoscope 0.7.0 to Scala 2.10 for compatibility with Cloudera's Spark 1.6.0 dependencies.

We have released Version 0.7.0 as a Maven artifact to our Bintray repository (see Setting Up A Schedoscope Project for an example pom).

(more)