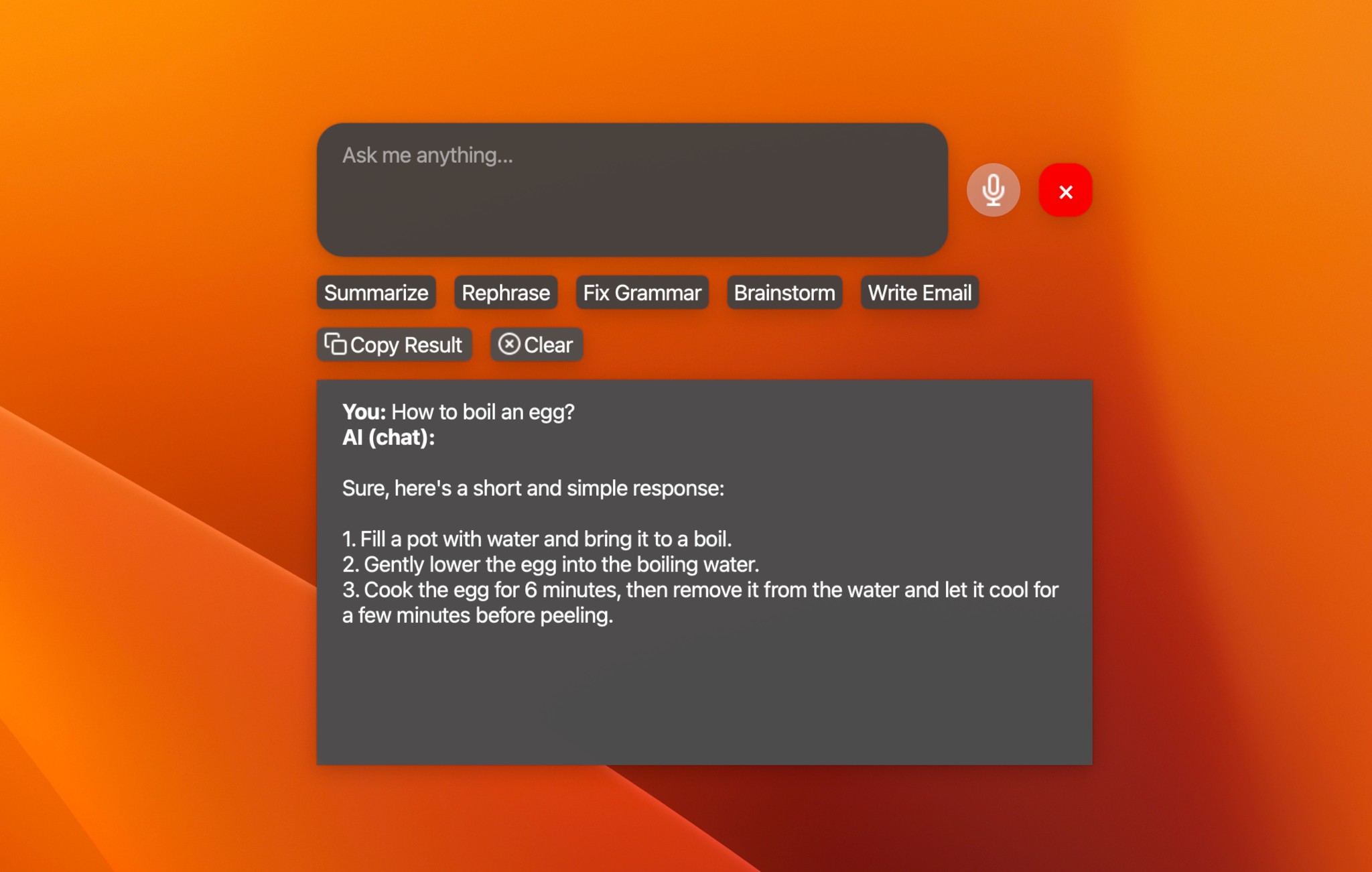

Your Local AI Assistant with Llama Models

 A simple AI-powered assistant to help you with your daily tasks, powered by Llama 3.2. It can recognize your voice, process natural language, and perform various actions based on your commands: summarizing text, rephasing sentences, answering questions, writing emails, and more.
 This assistant can run offline on your local machine, and it respects your privacy by not sending any data to external servers.
-**Only support macOS for now. Windows and Linux support are coming soon.**
-
 ## Features
@@ -25,7 +32,7 @@ This assistant can run offline on your local machine, and it respects your priva
 ## Technologies Used
--
+-
 -
 -
 -
@@ -53,6 +60,32 @@ pip install llama-assistant
 pip install -r requirements.txt
 ```
+**Speed Hack for Apple Silicon (M1, M2, M3) users:** 🔥🔥🔥
+
+- Install Xcode:
+
+```bash
+# check the path of your xcode install
+xcode-select -p
+
+# xcode installed returns
+# /Applications/Xcode-beta.app/Contents/Developer
+
+# if xcode is missing then install it... it takes ages;
+xcode-select --install
+```
+
+- Build `llama-cpp-python` with METAL support:
+
+```bash
+pip uninstall llama-cpp-python -y
+CMAKE_ARGS="-DGGML_METAL=on" pip install -U llama-cpp-python --no-cache-dir
+pip install 'llama-cpp-python[server]'
+
+# you should now have llama-cpp-python v0.1.62 or higher installed
+llama-cpp-python 0.1.68
+```
+
 ## Usage
 Run the assistant using the following command:
diff --git a/llama_assistant/_version.py b/llama_assistant/_version.py
deleted file mode 100644
index 9ad9824..0000000
--- a/llama_assistant/_version.py
+++ /dev/null
@@ -1,16 +0,0 @@
-# file generated by setuptools_scm
-# don't change, don't track in version control
-TYPE_CHECKING = False
-if TYPE_CHECKING:
-    from typing import Tuple, Union
-    VERSION_TUPLE = Tuple[Union[int, str], ...]
-else:
-    VERSION_TUPLE = object
-
-version: str
-__version__: str
-__version_tuple__: VERSION_TUPLE
-version_tuple: VERSION_TUPLE
-
-__version__ = version = '0.1.dev1+gcceb82b.d20240927'
-__version_tuple__ = version_tuple = (0, 1, 'dev1', 'gcceb82b.d20240927')
diff --git a/llama_assistant/resources/__init__.py b/llama_assistant/resources/__init__.py
new file mode 100644
index 0000000..e69de29
diff --git a/logo.png b/logo.png
new file mode 100644
index 0000000..b58daf0
Binary files /dev/null and b/logo.png differ
diff --git a/pyproject.toml b/pyproject.toml
index 0be492b..53970d4 100644
--- a/pyproject.toml
+++ b/pyproject.toml
@@ -4,7 +4,7 @@ build-backend = "setuptools.build_meta"
 [project]
 name = "llama-assistant"
-version = "0.1.10"
+version = "0.1.12"
 authors = [
     {name = "Viet-Anh Nguyen", email = "vietanh.dev@gmail.com"},
 ]
@@ -31,6 +31,7 @@ dependencies = [
     "llama-cpp-python",
     "pynput",
     "SpeechRecognition",
+    "huggingface_hub",
 ]
 dynamic = []
diff --git a/requirements.txt b/requirements.txt
index 542f13d..a093266 100644
--- a/requirements.txt
+++ b/requirements.txt
@@ -2,4 +2,5 @@ PyQt6==6.7.1
 SpeechRecognition==3.10.4
 markdown==3.7
 pynput==1.7.7
-llama-cpp-python
\ No newline at end of file
+llama-cpp-python
+huggingface_hub==0.25.1
\ No newline at end of file
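
Note on the README hunk: the added build instructions say the result should be `llama-cpp-python` v0.1.62 or higher. A minimal sketch of how that check could be scripted is below. It is not part of this change; the helper names `parse_version`, `meets_minimum`, and `installed_version` are hypothetical, and the parser is deliberately simplistic (numeric dotted components only), assuming that is enough for this comparison.

```python
import re
from importlib import metadata


def parse_version(text):
    """Turn '0.1.68' into (0, 1, 68); stop at the first non-numeric component."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum="0.1.62"):
    """Compare two dotted version strings component by component."""
    return parse_version(installed) >= parse_version(minimum)


def installed_version(dist_name="llama-cpp-python"):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    if found is None:
        print("llama-cpp-python is not installed")
    elif meets_minimum(found):
        print(f"llama-cpp-python {found} satisfies the 0.1.62 minimum")
    else:
        print(f"llama-cpp-python {found} is older than 0.1.62")
```

This only confirms the installed version; it does not confirm that the wheel was actually built with Metal enabled.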