-

Notifications

You must be signed in to change notification settings - Fork 16

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

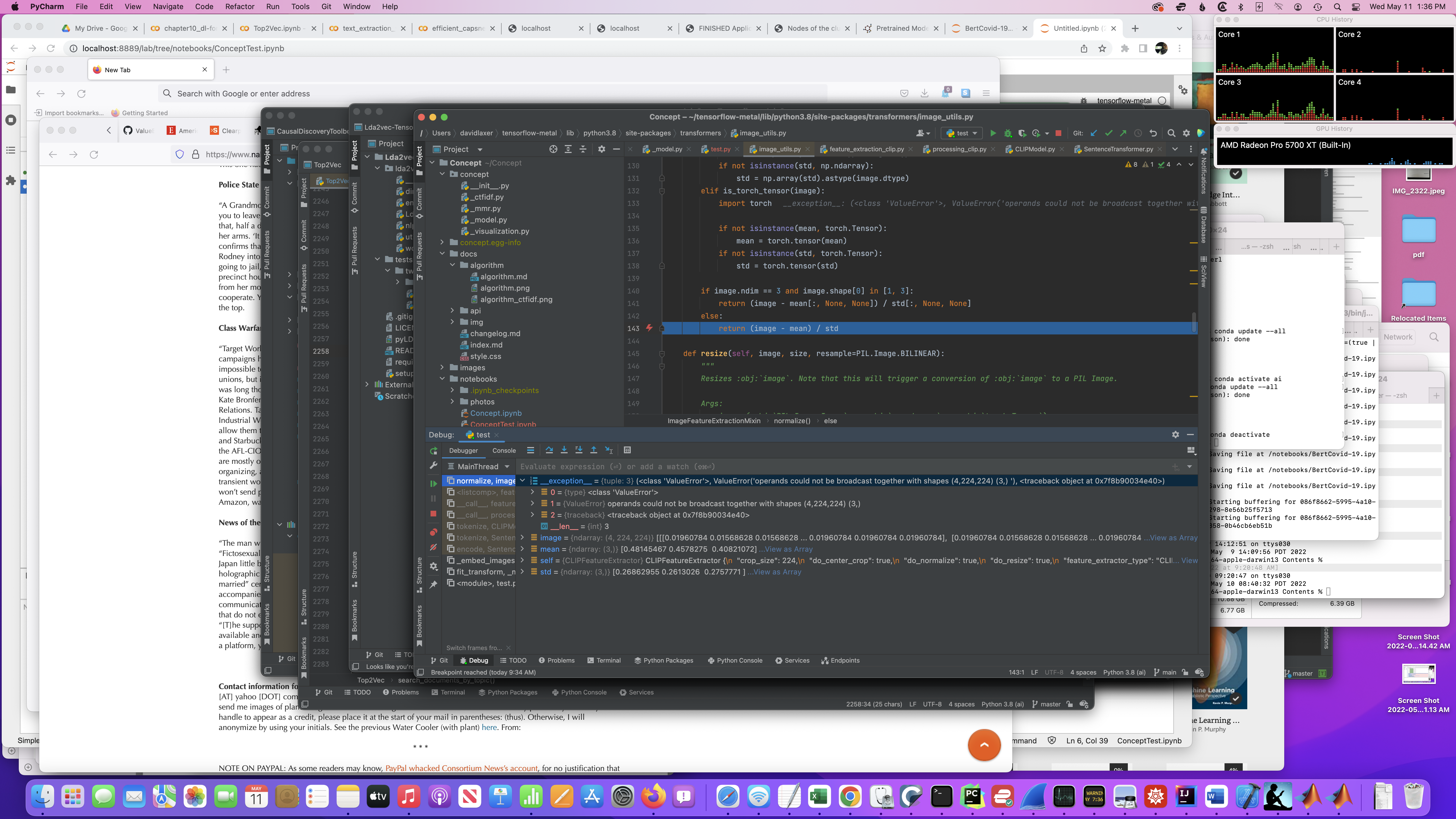

ValueError: operands could not be broadcast together with shapes (4,224,224) (3,) #12

Comments

|

That indeed does sound familiar. I can remember having trouble with some of the images when starting out developing the packages but I cannot find the code for fixing this. I believe you are indeed correct that some of the images are either corrupted or in a different format. I would have to do some digging but it indeed seems that this might not be the best dataset to be used unless some images are removed... |

|

Not an elegant solution ... but it was quick: |

|

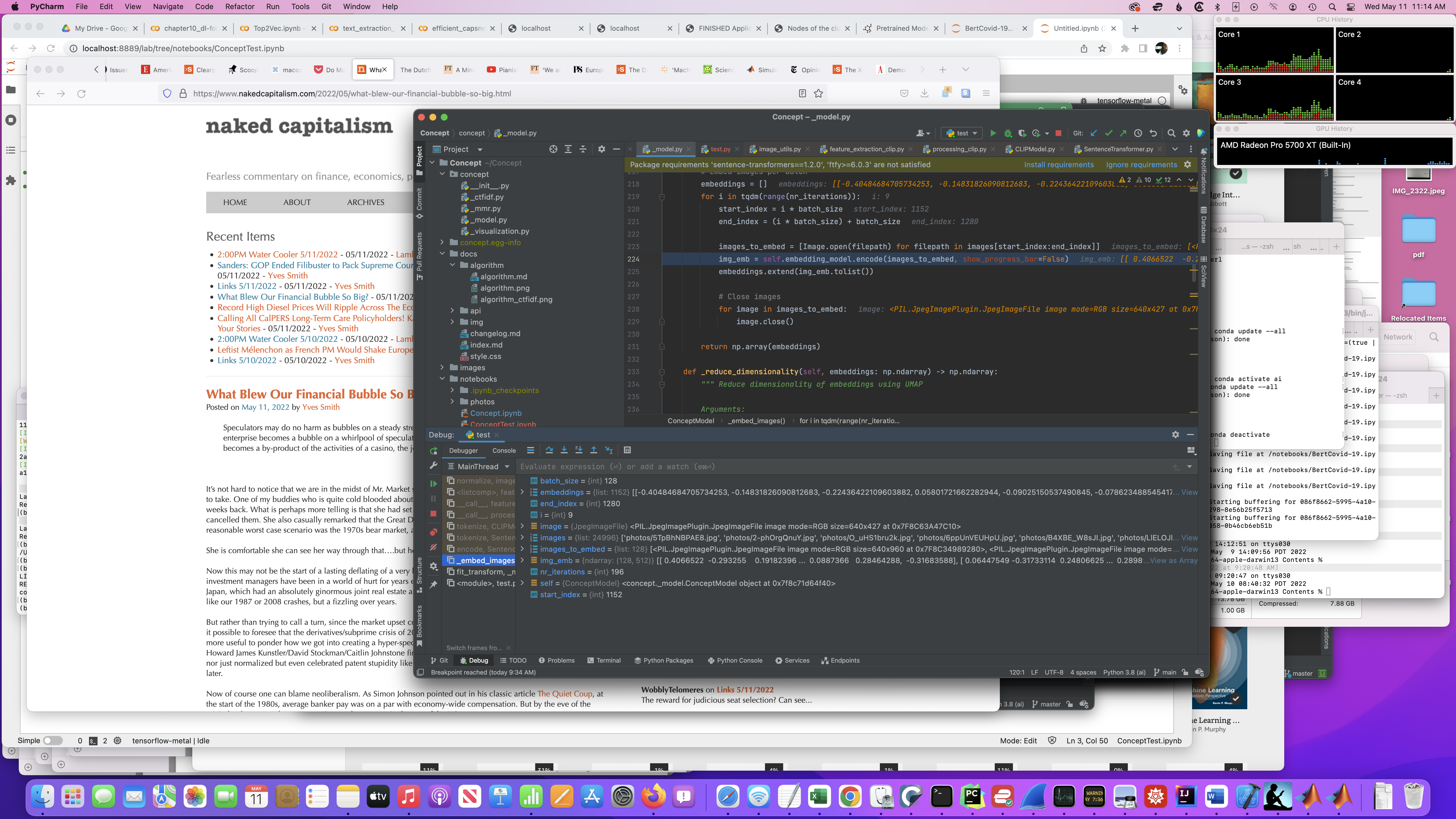

I just tried out the following code in a Kaggle session and I had no issues: import os

import glob

import zipfile

from tqdm import tqdm

from sentence_transformers import util

from concept import ConceptModel

# 25k images from Unsplash

img_folder = 'photos/'

if not os.path.exists(img_folder) or len(os.listdir(img_folder)) == 0:

os.makedirs(img_folder, exist_ok=True)

photo_filename = 'unsplash-25k-photos.zip'

if not os.path.exists(photo_filename): # Download dataset if does not exist

util.http_get('http://sbert.net/datasets/' + photo_filename, photo_filename)

# Extract all images

with zipfile.ZipFile(photo_filename, 'r') as zf:

for member in tqdm(zf.infolist(), desc='Extracting'):

zf.extract(member, img_folder)

img_names = list(glob.glob('photos/*.jpg'))

# Train model

concept_model = ConceptModel()

concepts = concept_model.fit_transform(img_names)It might mean that something indeed went wrong when trying to load the images in your environment.

Concept uses UMAP, which is stocastisch by natures, which means that every run you will get different results. So comparing outputs is not easily done unless you set a random_state in UMAP which might hurt performance. |

Indeed, this is quite strange as it is working perfectly fine for me in a Kaggle session with the following packages: transformers==4.15.0

tokenizers==0.10.3

sentence-transformers==1.2.0

numpy==1.20.3Hopefully, the issue you posted on the CLIP page gives a bit more insight. |

Running a Concept example on OS S Monterey 12.3.1

...Transformers/Image_utils #143:

return (image - mean) / std

image is (4,224,224)

mean is (3,)

std is (3,)

Here's the code:

The exception is in the normalize() function ... I believe in the 9th Pil image:

The text was updated successfully, but these errors were encountered: